Re-Inventing Your Business with Emerging Tech

New technologies change rapidly, creating countless opportunities for businesses to grow. We are an R&D company dedicated to helping you adopt the latest tech and create a lasting return for your business.

Fluid & Modular Know-How

End-to-End Support

Holistic Innovation Approach

Creating Impact in Various Fields

Business Process Optimization

- Technology enables new ways to increase efficiency, speed and effectiveness in organizations.

- We develop tailored technology solutions aligned with your business goals.

Production Automation

- Maximize productivity, quality, and human potential for ultimate competitiveness and success.

- We create custom tech solutions, automating and optimizing your production for peak performance.

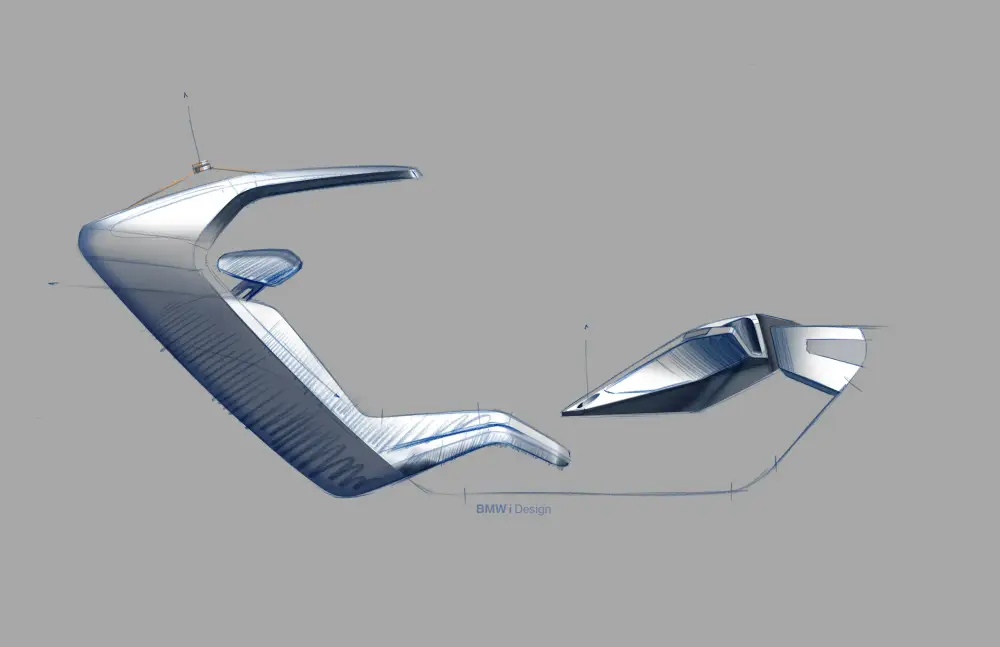

Product and Technology Innovation

- Pioneer product and tech innovations to enhance your competitiveness.

- We guide you through the entire tech innovation process.

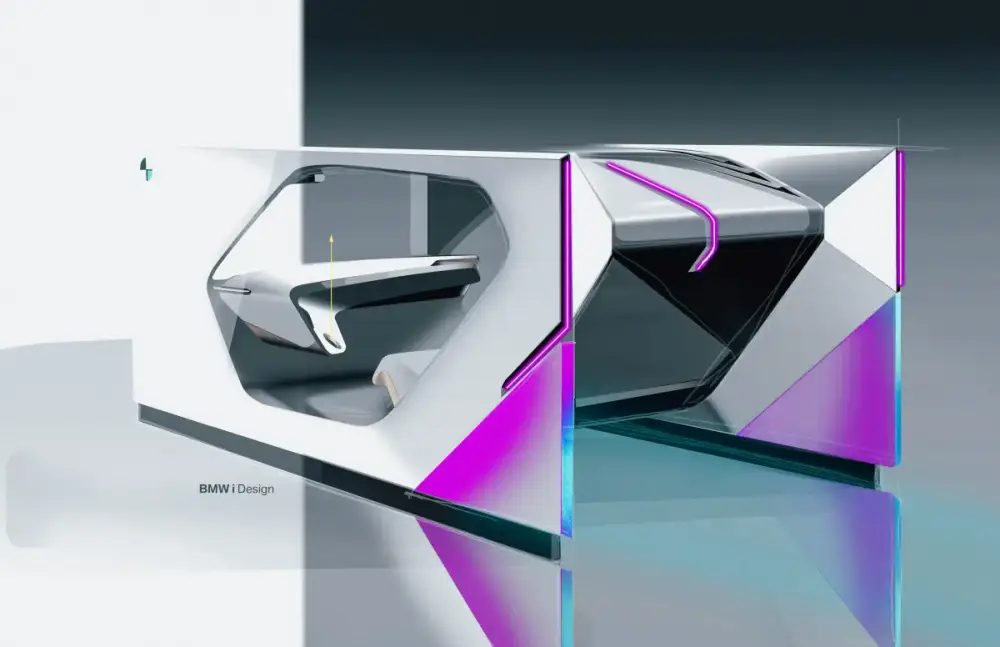

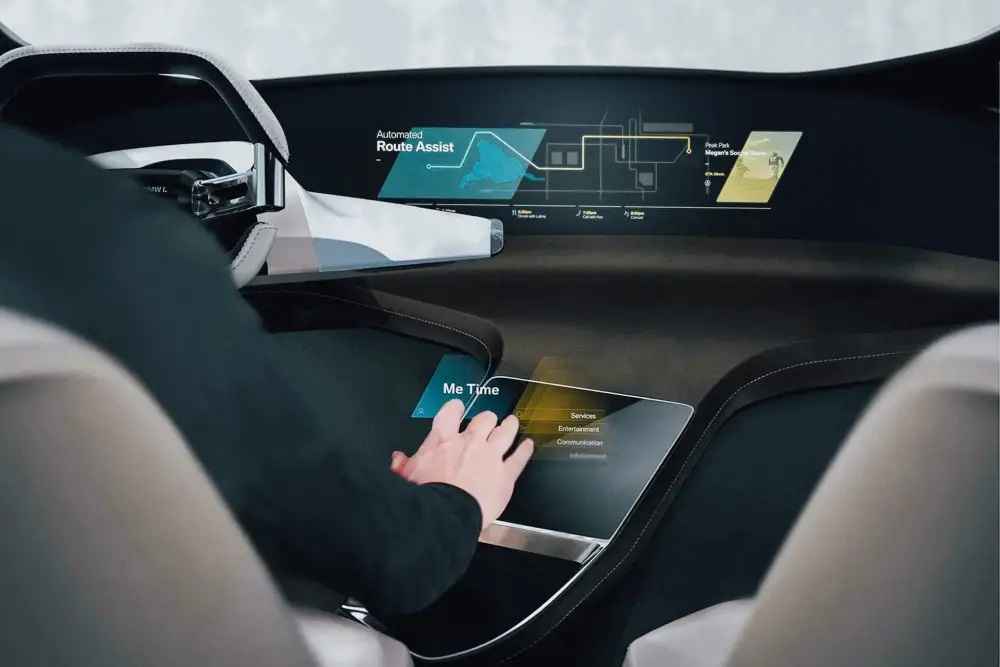

User Experience

- We build software, HMIs and XR solutions that your customers will love.

- This enables new customer value and novel user experiences to drive business performance.

We Are Re-Inventing R&D

Many companies struggle to harness emerging technologies for actual value creation. With our interdisciplinary expertise in Production Automation, Product Innovation, Business Process Optimization and User Experience, we turn emerging technologies into productive solutions.

Get Access to the Newest Technologies with Us

FAQs

Welcome to our FAQ section! Here, you can find a list of frequently asked questions to provide you with quick and helpful answers. If you can't find what you're looking for, feel free to reach out to us.

As an R&D company, Motius specializes in solving challenges with emerging tech to create long-lasting value for leading companies. We offer end-to-end support, from innovation consulting to customized technical development. To do this, Motius relies on a unique fluid structure in which the experienced core team is flexibly complemented, as needed, with know-how from a talent pool of more than 900 tech experts. This makes it possible to assemble the ideal project team for each task, with the latest expertise in future technologies.

At Motius, we prioritize the use of cutting-edge technologies in highly innovative R&D projects spanning various tech stacks. While fostering a startup culture in our office, we also strive to establish regular processes and structures for organizing daily work. In addition to remote and flexible work, Motius offers a range of benefits that cultivate a pleasant company culture. Team events and regular activities, such as Spikeball championships and pub quizzes, are an integral part of life at Motius. We also aim to be an adaptable employer that accommodates different life phases and diverse individuals while fostering a strong sense of unity. Through our Flex to Grow program, we offer opportunities for part-time work, unpaid leave, or sabbaticals, and through our Parental Leave Program we support you in starting a family.

Technologies are subject to rapid change and open up numerous opportunities for companies to grow. However, many companies struggle to harness them for actual value creation. We support leading companies in integrating the latest technologies and creating sustainable profitability. With our interdisciplinary expertise in production automation, product innovation, business process optimization, and user experience, we turn future technologies into productive solutions.

Monday to Friday, 9:00 to 18:00. Closed on Saturday and Sunday.

At Motius, our permanent positions follow a structured three-step application process. It starts with an initial interview with one of our recruiters, followed by a technical interview and, finally, our Motius Experience Day. For our talent pool, the application process consists of two steps. First, there is a talent pool interview, during which we assess your suitability for the pool based on your skills, experience, and potential. If you are selected, the second step is a technical interview tailored to a specific project role.